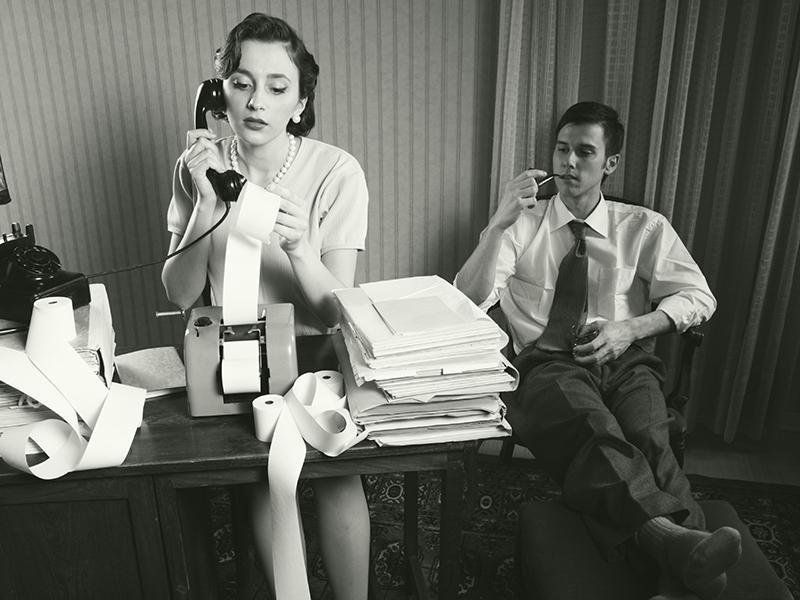

The acceleration of artificial intelligence development is quickly redefining the workplace while spurring innovation and growth around the sharing and use of information. If our role in higher education is to prepare students for the reframed workplace, we must equip them with the AI skills their employers will expect. In our experience of teaching decision-making in a digital context, students’ use of AI raises concerns about their development as ethical decision-makers.

What should educators consider when confronted with changing digital technologies?

How AI is dividing higher education institutions

A positive view of AI writers such as ChatGPT is that they can help students produce clearer and more effective writing. A contrasting view is that AI makes cheating easier and threatens academic integrity. Consequently, departments are divided on how to deal with the challenges that AI presents to higher education institutions (HEIs). One challenge is that by “letting the computer do it”, the student both undermines their own learning and fakes the evidence of that learning, which subverts academic integrity. Because detecting machine-generated but distinct responses is nearly impossible, some Australian universities are returning to handwritten examinations to prevent this misrepresentation.

However, using a practice-based learning approach may instead help students better engage with AI in their future workplace. For example, to emulate the use of AI in the workplace, you might provide students with generated output for critical evaluation, further development and contextualisation.

Teaching digital ethics could be a solution

AI may provide many opportunities for enhancing practice in higher education, but ethical implications and risks also arise from the use of digital technologies.

In 2009, Rafael Capurro described digital ethics as the impact of information and communication technology on society in consideration of what is socially and morally acceptable. In our teaching of digital ethics, we emphasise the idea of doing what is right, not what you can get away with. Rather than prohibiting the use of AI tools, educators must ensure that students understand how to use them responsibly. Teaching digital ethics encourages critical thinking and a principled and effective use of technology. For example, students might be given a scenario that explores the ethical dilemmas AI-generated art poses for artistic authorship, then discuss it in depth in class. By engaging students with the philosophical questions of digital ethics, we can bridge the gap between technological innovations and socially accepted values.

This discussion should be framed around the need for responsible use that enhances student learning as well as their understanding of the constraints of AI.

Responsible use requires the user to consider the quality of the material used by the AI to produce its result. Another issue is whether the tool properly addresses data privacy, intellectual property and copyright concerns. As digital citizens, users also need to use AI tools responsibly and respectfully. The user is accountable for their own work, in their role as both a student and future professional. They also need to be transparent in using these tools.

Passive engagement with AI tools (accepting their results without care or critical thought) is unethical. For example, a student misrepresenting the raw results from an AI tool as their own work is not acting as a responsible user. As a responsible user, the student must know and evaluate the sources of the information they access. Doing so requires the student to build their higher-order thinking skills.

Developing good digital citizens

The development of critical thinking skills is crucial for students using AI tools. Educators must rethink their approach to increase students’ awareness of the role of their “electronic colleague”. This requires that students use digital technologies responsibly to complement their skills, not replace them. The digitally ethical user needs to know how to ask questions of the AI to correctly elicit usable responses that they understand. For example, rather than asking a single complex question, the user should engage in an interactive conversation with their electronic colleague using a series of focused questions.

Students must also recognise the constraints of these tools, which are only as effective as the source material used to train them. Famously, the initial trial release of ChatGPT used source material only up to 2021; it did not encompass more recent events. Additionally, AI tools often perpetuate bias, as illustrated by Amazon’s recruiting algorithm and the use of AI in the US healthcare system. This bias arises because the AI model creates its response by assigning a probability distribution over a sequence of words, and it derives that distribution from the source material, so any skew in the material carries through to the output. For the same reason, the apparent confidence of the AI in its own response is striking but misplaced – an AI with the Dunning-Kruger effect.
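To make the point about probabilities concrete, here is a minimal sketch of a toy word-pair model in Python. It is an illustration only, not how ChatGPT or any real system is built: the function name `train_bigram_model` and the four-sentence corpus are hypothetical, and the corpus is deliberately skewed so the effect is easy to see.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count which word follows which, then turn the counts into probabilities."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        # Pair each word with the word that immediately follows it.
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return {
        word: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for word, followers in counts.items()
    }


# Hypothetical, deliberately skewed "source material" (an assumption for illustration).
corpus = [
    "the engineer said he would help",
    "the engineer said he was busy",
    "the engineer said he agreed",
    "the engineer said she agreed",
]

model = train_bigram_model(corpus)

# Probabilities for the word that follows "said": three of the four sentences use "he".
print(model["said"])
```

The model has no view on who engineers are; it simply reports the proportions it found in its source material. A classroom exercise could ask students to rebalance the corpus and observe how the probabilities shift.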

A responsible professional cannot claim ignorance by saying: “The computer made me do it.” As experts, they should have the skills to determine fact from fiction. Equally, responsible HEIs need to develop students with the skills to be good digital citizens.

Embrace the pace of AI

Overall, these discussions of the ethical and social implications of AI will recur frequently because of the technology’s pace of change. Advancements in AI will continue to disrupt the economy and reframe future jobs, much as personal computers did before them. We do not teach students to use a slide rule in a world of calculators.

With clear ethical guidelines still evolving, the task before HEIs is daunting but cannot be shirked. Returning to handwritten examinations is not the solution. Perhaps we need to incorporate digital ethics into our practice-based learning approaches to better equip our students?

Micheal Axelsen is senior lecturer in business information systems at the UQ Business School and programme leader for the Master of Commerce, and Suzanne Bonner is a lecturer in the School of Economics and programme leader for the Master of Economics; both at the University of Queensland.
